Operating System - Learn Thread with C/C++, Python


What is a Thread?

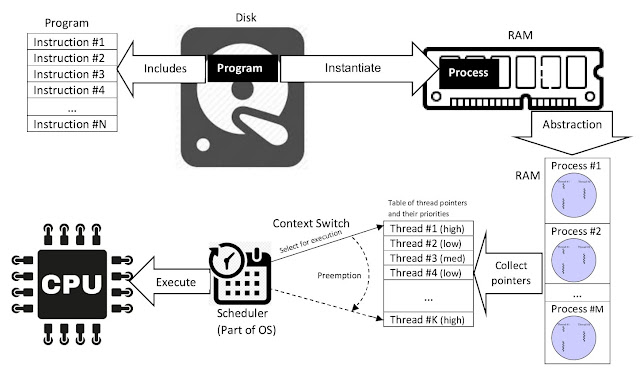

A thread is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically part of the operating system. Multiple threads can reside in one process, executing concurrently and sharing resources such as memory. Separate processes do not share resources by default, whereas threads within the same process do, so switching between threads of one process carries less overhead than switching between processes.

Program vs. Process vs. Thread

Comparing processes with threads

Process

- Heavyweight unit of kernel scheduling, because each process owns its resources

- Resources include memory, file handles, sockets, device handles, windows, and a process control block (PCB)

- Creating or destroying a process is relatively expensive as resources must be acquired or released.

Thread

Kernel Thread

- Lightweight unit of kernel scheduling

- Threads within the same process share that process's resources.

- Threads do not own resources except for a stack, a copy of the registers (including the program counter), and thread-local storage.

- Creating or destroying a thread is relatively cheap because few resources have to be acquired or released. A context switch between threads of the same process is also cheap, because it only requires saving and restoring the registers and stack pointer, which are not shared.
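To make the split between shared and per-thread state concrete, here is a minimal Python sketch. The names `worker`, `run_workers`, `shared_results`, and `thread_local` are hypothetical and chosen only for illustration. It assumes the standard `threading` module: the list is visible to every thread in the process, while attributes set on a `threading.local()` object are visible only to the thread that set them.

```python
import threading

shared_results = []               # one list, visible to every thread in the process
results_lock = threading.Lock()   # protects the shared list
thread_local = threading.local()  # each thread sees only its own attributes here


def worker(worker_id):
    # Stored per thread: other threads cannot read this value.
    thread_local.name = f"worker-{worker_id}"
    # A local variable: private to this call in this thread.
    local_total = sum(range(worker_id + 1))
    with results_lock:
        shared_results.append((thread_local.name, local_total))


def run_workers(count=3):
    shared_results.clear()
    threads = [threading.Thread(target=worker, args=(i,)) for i in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # The calling thread never set thread_local.name, so it has no such attribute.
    caller_has_name = hasattr(thread_local, "name")
    return sorted(shared_results), caller_has_name


if __name__ == "__main__":
    print(run_workers())
```

Every worker writes into the same list, which is the shared memory described above, yet the calling thread cannot see any worker's `thread_local.name`.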

User Thread

- Threads implemented in user-space libraries are called user threads.

- Context switches between user threads are very efficient because they need no interaction with the kernel at all. A switch is performed by locally saving the current thread's CPU registers and loading the registers of the next thread.

- Blocking system calls made by user threads can be problematic, because the kernel blocks the entire process when such a call is made. This can be solved with an I/O API that blocks only the calling thread rather than the entire process: it uses non-blocking I/O internally and schedules another user thread or fiber while the I/O operation is in progress.
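The idea of switching between user threads without involving the kernel can be sketched with Python generators. This is only an analogy, not how a real user-thread library saves registers: each generator keeps its own execution state, `yield` hands control back, and a small round-robin loop decides who runs next. The names `task`, `run_round_robin`, and `demo` are hypothetical.

```python
from collections import deque


def task(name, steps, log):
    # A cooperative "user thread": does one step of work, then yields.
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # voluntarily hand control back to the scheduler


def run_round_robin(tasks):
    # A user-space scheduler: no kernel call is needed to switch tasks.
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)        # resume the task until its next yield
        except StopIteration:
            continue             # the task finished, so drop it
        ready.append(current)    # still runnable: back of the queue


def demo():
    log = []
    run_round_robin([task("A", 2, log), task("B", 3, log)])
    return log


if __name__ == "__main__":
    print(demo())
```

The sketch also shows the weakness noted above: if one task made a blocking call instead of yielding, the loop would stall and no other task could run.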

Thread and Data Synchronization

- A race condition occurs when more than one thread accesses the same data at the same time and at least one of them modifies it, so the result depends on the timing of the threads. This can cause the program to behave in unexpected ways.

- To prevent race conditions, we can use a mutex to lock data structures against concurrent access.
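As a minimal illustration in Python, the sketch below guards a shared counter with `threading.Lock`, which plays the role of a mutex. The `Counter` class and `run_counter` function are hypothetical names used only for this example. Without the lock, `self.value += 1` is a read-modify-write sequence, so updates from different threads may be lost.

```python
import threading


class Counter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()  # the mutex protecting self.value

    def increment(self):
        # Only one thread at a time may execute this block.
        with self._lock:
            self.value += 1


def run_counter(num_threads=4, increments=10_000):
    counter = Counter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run_counter())
```

With the lock in place, the final count always equals `num_threads * increments`, regardless of how the threads are interleaved.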

Multi-threading using mutex to prevent race condition - C/C++

Thread pools

- A set number of threads is created at startup and placed in a thread pool, where they wait for tasks to be assigned. When a new task arrives, a thread wakes up, completes the task, and goes back to the waiting state.

- This avoids the relatively expensive creation and destruction of a thread for every task. It also takes thread management out of the application developer's hands and leaves it to a library or the operating system, which is better suited to optimize it.
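A thread pool along these lines can be sketched with `ThreadPoolExecutor` from Python's standard `concurrent.futures` module. This is an illustrative stand-in, not the code this post refers to: `fake_download`, `run_sequential`, and `run_with_pool` are hypothetical names, and `time.sleep` merely simulates a blocking I/O operation.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(item):
    # Stand-in for a blocking I/O task such as a network request.
    time.sleep(0.05)
    return item * 2


def run_sequential(items):
    # Baseline: one task after another on the calling thread.
    return [fake_download(i) for i in items]


def run_with_pool(items, max_workers=4):
    # The pool creates at most max_workers threads and reuses them for all tasks.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() hands each item to an idle worker and keeps results in input order.
        return list(pool.map(fake_download, items))


if __name__ == "__main__":
    items = list(range(8))
    start = time.perf_counter()
    run_sequential(items)
    print("sequential:", round(time.perf_counter() - start, 2), "s")
    start = time.perf_counter()
    run_with_pool(items)
    print("thread pool:", round(time.perf_counter() - start, 2), "s")
```

Because the simulated tasks spend their time waiting rather than computing, the pooled version should finish noticeably sooner than the sequential one, although the exact timings depend on the machine.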

Multi-threading with Python (thread pools)

This code is referenced from here. You can switch to multithreading by using the main_multithreading() function.

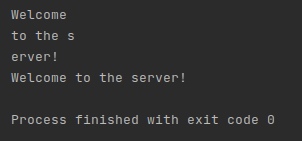

Now let's look at the result when we are not using multithreading.

REFERENCE

https://www.blogger.com/blog/posts/3037945744859167620
